Cognitive APIs. Vision. Part 2.

In part 1 of this blog series I had Microsoft’s Computer Vision analyze my avatar. Today I would like to ask Mr. Watson from IBM to do the same.

Setup

Same as last time, modern JavaScript and a modern browser.

Getting started with Watson APIs takes a few more steps, but it’s still very intuitive. Once you’re all set with a Bluemix account, you can provision the service you need and let it see your images.

API

IBM had two vision APIs. AlchemyVision has recently been merged into Visual Recognition. If you use the original Alchemy endpoint, you will receive the following notice in the JSON response: THIS API FUNCTIONALITY IS DEPRECATED AND HAS BEEN MIGRATED TO WATSON VISUAL RECOGNITION. THIS API WILL BE DISABLED ON MAY 19, 2017.

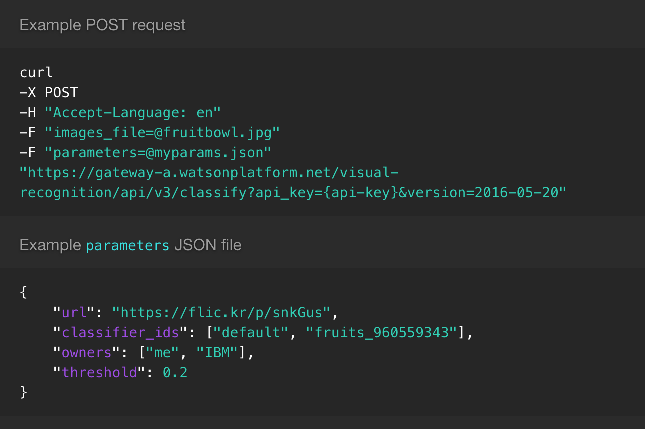

The new unified API is a little weird. Similar to Computer Vision from Microsoft, it can process binary images or fetch an image by its URL. Both need to be submitted as multipart/form-data, though. Here’s an example from the API reference:

It’s the first HTTP API I’ve seen that asks me to supply JSON parameters as a file upload. You guys? Anyway. Thanks to the Blob object, I can emulate a multipart file upload directly from JavaScript.
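A minimal sketch of the Blob trick, under a few assumptions: the form field name `parameters`, the `url` key inside the JSON, and the file name `parameters.json` are illustrative and should be checked against the API reference. The helper names are hypothetical too.

```javascript
// Build the JSON that the API wants delivered as a "file".
// The `url` key is an assumption about the parameter schema.
function buildParametersJson(imageUrl) {
  return JSON.stringify({ url: imageUrl });
}

// Browser-side: wrap the JSON in a Blob so that FormData sends it
// as a file part of a multipart/form-data request.
// The field name `parameters` and the file name are assumptions.
function buildClassifyForm(imageUrl) {
  const json = buildParametersJson(imageUrl);
  const blob = new Blob([json], { type: "application/json" });
  const form = new FormData();
  form.append("parameters", blob, "parameters.json");
  return form;
}
```

Passing the resulting FormData as the request body lets the browser set the multipart boundary on its own, so there should be no need to set Content-Type by hand.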

Another puzzling bit is the version parameter. There’s a v3 in the URL, but you also need to supply the release date of the API version you want to use. Trying without it gives me a 400 Bad Request. There’s no version on the service instance that I provisioned, so I just rolled with what’s in the API reference. It worked.
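To make the two kinds of version concrete, here is a rough sketch of composing the request URL. The helper `buildClassifyUrl`, the `/v3/classify` path, and the query parameter names `api_key` and `version` are assumptions for illustration; the base URL and the date should come from your service instance and the API reference.

```javascript
// Hypothetical helper: the "v3" lives in the path, while the release
// date travels as a query parameter next to the API key.
// Parameter names `api_key` and `version` are assumptions.
function buildClassifyUrl(baseUrl, apiKey, versionDate) {
  const query = new URLSearchParams({ api_key: apiKey, version: versionDate });
  return `${baseUrl}/v3/classify?${query.toString()}`;
}
```

Calling it with a base URL, a key, and a date in YYYY-MM-DD form yields a single URL that carries both the path version and the dated version.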

I also couldn’t use fetch with this endpoint. This time it’s not on Watson though. My browser would happily send Accept-Encoding with gzip in it and IBM’s nginx would gladly zip up the response. Chrome dev tools can deal with it but fetch apparently can’t. I get SyntaxError: Unexpected end of input at SyntaxError (native) when calling .json() on the response object.

Not sending Accept-Encoding would help, but it’s one of the forbidden request headers that scripts can’t set. I had to resort to good old XHR.
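The XHR fallback might look roughly like this. It is a sketch, not the exact code behind this post: `postForJson` is a hypothetical helper, and the injectable constructor argument is there only so the function can be exercised outside a browser.

```javascript
// POST a body with XMLHttpRequest and resolve with the parsed JSON.
// `XhrCtor` defaults to the browser's XMLHttpRequest; passing another
// constructor is only meant for testing outside a browser.
function postForJson(url, body, XhrCtor = globalThis.XMLHttpRequest) {
  return new Promise((resolve, reject) => {
    const xhr = new XhrCtor();
    xhr.open("POST", url);
    xhr.onload = () => {
      if (xhr.status < 200 || xhr.status >= 300) {
        reject(new Error(`HTTP ${xhr.status}`));
        return;
      }
      try {
        // Parse by hand instead of relying on response.json().
        resolve(JSON.parse(xhr.responseText));
      } catch (err) {
        reject(err);
      }
    };
    xhr.onerror = () => reject(new Error("Network error"));
    xhr.send(body);
  });
}
```

Wrapping XHR in a Promise keeps the calling code close to what it would have been with fetch, so swapping back later should be a small change.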

And Here We Go

// my avatar

The response from Watson?

Person, 100%

Yep. That’s it. That’s all the built-in classifier could tell me. You can train your own classifier(s), but they all appear to be basic. There is no feature detection that would let me describe images. I tried to list all the classes in the default classifier, but the discovery endpoint returns 404 for default. I guess I will have to check back later ;)
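For the curious, turning the raw JSON into a line like "Person, 100%" could be sketched as follows. The nested images, classifiers, classes layout with `class` and `score` fields is an assumption about the response shape, and `describeClasses` is a hypothetical helper.

```javascript
// Flatten a classify response into "Label, NN%" strings.
// Assumed shape: { images: [{ classifiers: [{ classes: [{ class, score }] }] }] }
function describeClasses(response) {
  const lines = [];
  for (const image of response.images || []) {
    for (const classifier of image.classifiers || []) {
      for (const cls of classifier.classes || []) {
        // Capitalize the class name and convert the 0..1 score to a percentage.
        const label = cls.class.charAt(0).toUpperCase() + cls.class.slice(1);
        lines.push(`${label}, ${Math.round(cls.score * 100)}%`);
      }
    }
  }
  return lines;
}
```

Missing levels are treated as empty arrays, so an image with no matching classes simply produces no lines.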

I have more computer vision APIs to try. Stay tuned!
