DNS as your Edge database

Modern computing has come a long way. Elastic architectures have become a commodity. With platforms like AWS and all of its serverless offerings, you can build highly reliable and highly scalable systems. Thanks to the proliferation of CDNs, we learned to push static content very close to end users. Then we learned to run compute at the edge as well. One thing we still can’t do effectively is push data to the edge.

What if I told you that you could use DNS? I didn’t come up with the idea. I read about it in a blog post some time ago, and when I ran into a problem that boiled down to “how do I get my data closer to the edge?”, I remembered that post and decided to give it a try.

An important caveat first: the problem I was solving is not a typical OLTP data problem. You are very unlikely to be able to replace a database with DNS using the approach I present here. You can, however, deliver a fairly stable (and fairly small) dataset to the edge and read it from anywhere in the world with response times in the low single- to double-digit milliseconds.

In a Nutshell

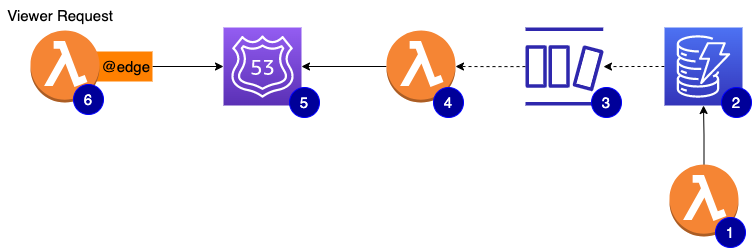

At a glance, the architecture looks like this:

- #1 is a Lambda function that is basically a CRUD API used by our developer portal to provision and manage your API access

- #2 is the main DynamoDB table, the system of record for all API key metadata

- #3 is the stream enabled on the DynamoDB table to stream out any changes

- #4 is a Lambda function subscribed to the stream. Depending on the event captured, it creates, updates, or deletes a DNS replica using one `TXT` record per key

- #5 is the `viewer-request`, which can now `dig` the DNS `TXT` record to quickly check whether the API key is valid and has access to the requested API
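The list above describes the write path (#4) and the read path (#5) only in prose. Here is a minimal Python sketch of both halves under assumptions the article does not state: the DNS provider is Amazon Route 53, the table's key is a hypothetical `apiKey` attribute with hypothetical `status` and `apis` attributes, the stream uses the `NEW_AND_OLD_IMAGES` view type, and records live under a hypothetical `keys.example.com` zone. The DNS lookup itself is left out because the Python standard library has no TXT resolver, so `has_access` takes the strings a resolver would return.

```python
import hashlib
import re

# Hypothetical values for this sketch; the article does not name them.
ZONE = "keys.example.com"
KEY_ATTR = "apiKey"
TTL_SECONDS = 60
_TOKEN = re.compile(r"^[A-Za-z0-9_-]+$")


def record_name(api_key: str) -> str:
    """Map an API key to a DNS name without putting the raw key in DNS.

    A SHA-256 hex digest is 64 characters, over the 63-character label limit,
    so it is truncated to 32 hex characters (128 bits).
    """
    digest = hashlib.sha256(api_key.encode("utf-8")).hexdigest()[:32]
    return f"{digest}.{ZONE}."


def txt_payload(image: dict) -> str:
    """Encode the replicated fields of a DynamoDB stream image as a compact string.

    Assumes hypothetical attributes 'status' (type S) and 'apis' (type SS).
    """
    status = image.get("status", {}).get("S", "inactive")
    apis = sorted(image.get("apis", {}).get("SS", []))
    # Restrict to simple tokens so no quoting or escaping is needed in TXT data.
    for token in [status, *apis]:
        if not _TOKEN.match(token):
            raise ValueError(f"unsupported characters in {token!r}")
    payload = f"v=1;s={status};apis={','.join(apis)}"
    # One TXT character-string holds at most 255 bytes (ASCII here, so len works).
    if len(payload) > 255:
        raise ValueError("payload exceeds one TXT character-string")
    return payload


def build_change(record: dict) -> dict:
    """Turn one DynamoDB stream record into a Route 53 change.

    The result would be sent with boto3, e.g.
    route53.change_resource_record_sets(HostedZoneId=..., ChangeBatch={"Changes": [change]})
    """
    ddb = record["dynamodb"]
    name = record_name(ddb["Keys"][KEY_ATTR]["S"])
    if record["eventName"] in ("INSERT", "MODIFY"):
        action, image = "UPSERT", ddb["NewImage"]
    elif record["eventName"] == "REMOVE":
        # DELETE must match the live record exactly, so rebuild its value from
        # the old image (requires the NEW_AND_OLD_IMAGES stream view type).
        action, image = "DELETE", ddb["OldImage"]
    else:
        raise ValueError(f"unexpected event {record['eventName']!r}")
    return {
        "Action": action,
        "ResourceRecordSet": {
            "Name": name,
            "Type": "TXT",
            "TTL": TTL_SECONDS,
            # Route 53 expects each TXT value wrapped in double quotes.
            "ResourceRecords": [{"Value": f'"{txt_payload(image)}"'}],
        },
    }


def has_access(txt_strings: list[str], api: str) -> bool:
    """Edge-side check on the character-strings returned by a TXT lookup."""
    # A resolver may split one TXT record into several strings; join them back.
    text = "".join(txt_strings)
    fields = dict(part.split("=", 1) for part in text.split(";"))
    return fields.get("s") == "active" and api in fields.get("apis", "").split(",")
```

Hashing the key keeps raw API keys out of a public zone, and a lookup that returns no record should be treated as an invalid key. The TTL trades how quickly a revoked key stops working against how often edge resolvers have to go back to the authoritative servers.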

Want to know more and see the code? Head over to our Anywhere Engineering blog and read the full article.

Till next time!